Research

Research Aims

Funding (Past to Present)

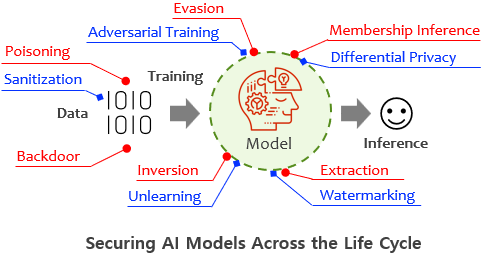

[12] Research Laboratory for Modular AI Watermarking for Generative AI Compliance, Supported by NRF (National Research Foundation of Korea); A joint project led by Jonguk Hou at Hallym University.

[11] Binary Micro-Security Patch Technology Applicable with Limited Reverse Engineering, Supported by IITP (Institute of Information & Communications Technology Planning & Evaluation); A joint project led by Jooyong Yi at Ulsan National Institute of Science and Technology (UNIST).

[10] Development of Integrated Platform for Expanding and Safely Applying Memory-safe Languages, Supported by IITP (Institute of Information & Communications Technology Planning & Evaluation); A joint project led by Yuseok Jeon at Korea University.

[9] Proofs and Responses against Evidence Tampering in the New Digital Environment, Supported by IITP (Institute of Information & Communications Technology Planning & Evaluation); A joint project led by Hyoungkee Choi at Sungkyunkwan University (SKKU).

[8] AI Platform to Fully Adapt and Reflect Privacy-Policy Changes, Supported by IITP (Institute of Information & Communications Technology Planning & Evaluation); A joint project led by Simon Woo at Sungkyunkwan University (SKKU).

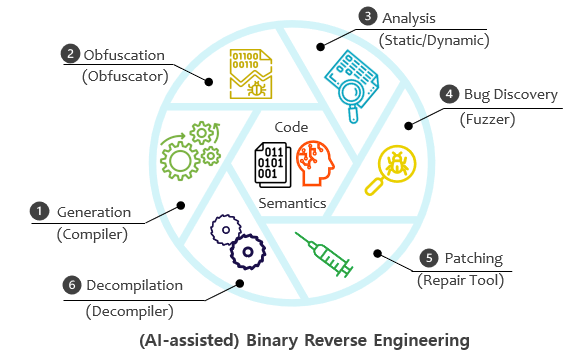

[7] A Generalizable and Continual Deep Learning Model for Inferring the Context of Binary Codes, Supported by NRF (National Research Foundation of Korea).

[6] Code Unpacking Intermediate Representation Conversion, Supported by NSRI (National Security Research Institute).

[5] A Metric to Measure the Quality of Decompiled Codes, Supported by NSRI (National Security Research Institute); A joint project led by Sungjae Hwang at Sungkyunkwan University (SKKU).

[4] Efficient Fuzzing for Internal Communication Protocols with Firmware of Unmanned Vehicles, Supported by NSRI (National Security Research Institute); A joint project led by Daehee Jang at Sungshin Women’s University.

[3] Semantic-aware Executable Binary Code Representation and its Applications with BERT, Supported by NRF (National Research Foundation of Korea).

[2] Autonomous Car Security as part of Advance Research and Development for Next-generation Security, Supported by IITP (Institute of Information & Communications Technology Planning & Evaluation); A joint project led by Yuseok Jeon at Ulsan National Institute of Science and Technology (UNIST), Haehyun Cho at Soongsil University, and Dokyung Song at Yonsei University.

[1] Vulnerability Analysis on IoTivity Protocol Provisioning, Supported by NSRI (National Security Research Institute); A joint project led by Hyoungkee Choi and Jaehoon Paul Jeong at Sungkyunkwan University (SKKU).